Artificial intelligence (AI) refers to the simulation of human intelligence in machines that are programmed to think like humans and mimic their actions. The goals of AI research include learning, reasoning, and perception. Major research areas include machine learning, which develops algorithms that learn from data and improve their accuracy over time without being explicitly programmed for each task; computer vision, which processes and analyzes visual images, today often using neural networks; and natural language processing, which enables computers to understand and generate human language.
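To make "learning from data" concrete, here is a minimal Python sketch that is not taken from any particular library. A model with two parameters, `w` and `b`, is never told the rule y = 2x + 1. Instead it estimates that rule from example pairs using gradient descent, and its error shrinks over successive passes (epochs). The data, learning rate, and epoch count are illustrative assumptions.

```python
# A minimal sketch of "learning from data": the rule y = 2x + 1 is never
# written into the model; it is estimated from example pairs instead.


def mean_squared_error(w, b, data):
    """Average squared difference between predictions w*x + b and targets y."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train(data, learning_rate=0.05, epochs=1000):
    """Fit w and b by batch gradient descent on mean squared error.

    Returns the learned parameters and the error recorded after each epoch.
    The learning rate and epoch count are illustrative choices.
    """
    w, b = 0.0, 0.0  # start with a model that knows nothing
    history = []
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        # Step against the gradient to reduce the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
        history.append(mean_squared_error(w, b, data))
    return w, b, history


if __name__ == "__main__":
    # Hypothetical training data generated from y = 2x + 1.
    examples = [(x, 2 * x + 1) for x in [0.0, 1.0, 2.0, 3.0, 4.0]]
    w, b, history = train(examples)
    print(f"learned w={w:.2f}, b={b:.2f}")
    print(f"error after first epoch: {history[0]:.4f}, after last: {history[-1]:.8f}")
```

Real machine learning systems use far larger models and datasets, but the loop is similar in spirit: predict, measure the error, and adjust the parameters to reduce it.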

Artificial intelligence has a wide range of applications across many industries and fields. In healthcare, AI is used for diagnosis, treatment recommendations, and medical image analysis. In business, AI informs decisions, predicts outcomes, and optimizes processes ranging from supply chain to marketing.

Additionally, AI is used in transportation for autonomous vehicles, in education for automated grading and adaptive learning, in agriculture for monitoring crops and automating tasks, and in gaming for simulations and interactive entertainment.

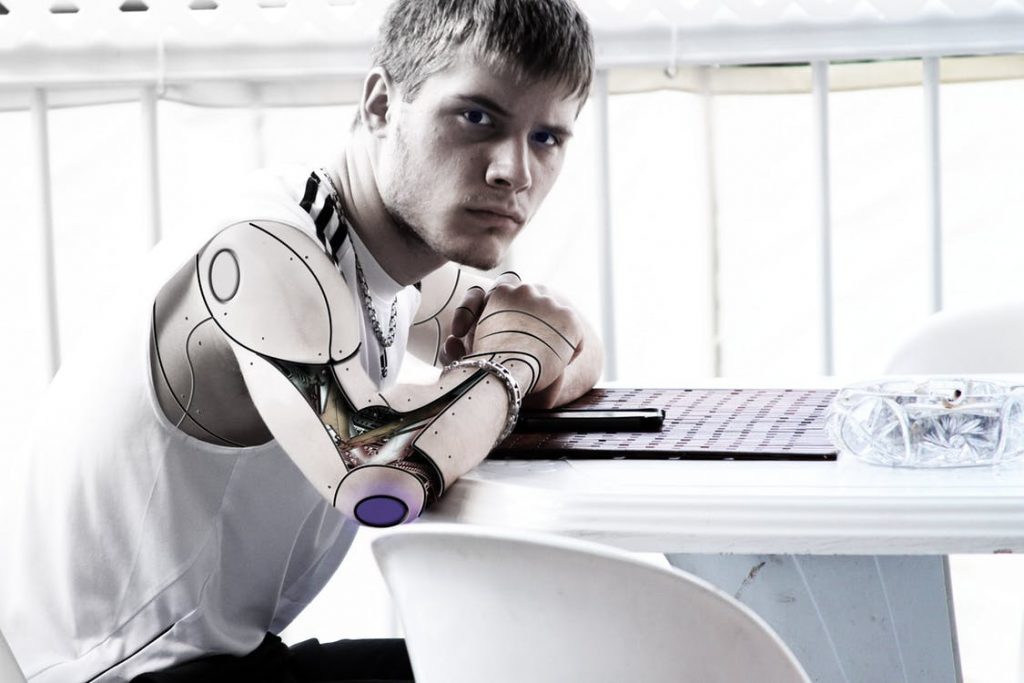

Other common applications of AI include speech recognition, visual perception, language translation, and even generating creative content such as music and fashion designs. Websites like www.tattoosai.com can now generate unique tattoo designs: deep-learning models are trained on collections of existing tattoo artwork in different styles, and can then produce new designs based on the inputs and criteria the user supplies.

Businesses increasingly use AI to streamline a range of operations, and many companies are starting to take advantage of its versatility. Software development companies such as XAM, for example, use AI in their app development and UI/UX design processes. The goal is generally to understand where the technology adds value and to build the best possible version of a product, and AI helps speed up that process.

AI has demonstrated remarkable capabilities across many industries, from helping doctors diagnose diseases to allowing websites to generate unique tattoo designs based on a user’s preferences. However, as the technology advances at a rapid pace, it also raises challenges around regulation, ethics, and potential risks, and there is an ongoing debate about how to govern AI development responsibly while still encouraging innovation. Before venturing into this field, it is worth knowing some of the best-known rules that have been proposed for AI.

Many of the laws proposed for robotics and artificial intelligence (two terms that are often used interchangeably) contradict one another. Let’s look at nine of these rules, in three sets of three, that clash with each other and make it challenging to regulate robots and artificial intelligence.

Asimov’s Laws

Isaac Asimov’s three laws concern robots driven by artificial intelligence. First set out in his 1942 short story “Runaround,” they are widely known in popular culture and have been analyzed just as widely. The three laws are:

Law 1- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

Law 2- A robot must obey the orders given to it by human beings, except where such orders would conflict with Law 1.

Law 3- A robot must protect its own existence as long as such protection does not conflict with Law 1 or Law 2.

These laws can make it complicated for a machine to decide how to act. AI is commonly divided into three types: narrow intelligence, general intelligence, and superintelligence. As robots and AI systems take on more complicated tasks, the laws become increasingly likely to conflict with one another. Some critics argue that the laws would confuse a robot, because it must protect itself and protect all human beings at the same time. Strictly speaking, Law 3 yields to Law 1, so a choice between saving itself and saving a human has a clear answer. The harder case is one where every option, including doing nothing, lets some human come to harm: Law 1 forbids both harmful action and harmful inaction, so the robot can neither act nor stand by without breaking it.
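One hypothetical way to see where the laws clash is to model them as a strict priority ordering: compare candidate actions on Law 1 first, and consult Law 2 and then Law 3 only to break ties. The Python sketch below assumes each option can be summarized by a count of humans harmed plus two yes/no flags, a large simplification that Asimov never specified. It shows that a self-versus-human choice resolves cleanly, while a dilemma in which every option harms someone leaves no action that satisfies Law 1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    """One candidate course of action, including doing nothing.

    The fields are a hypothetical simplification: real situations are far
    harder to reduce to counts and yes/no flags.
    """
    name: str
    humans_harmed: int      # Law 1: harm caused by acting OR by inaction
    disobeys_order: bool    # Law 2: would this ignore a human's order?
    robot_destroyed: bool   # Law 3: does the robot fail to protect itself?


def law_priority(option: Option) -> tuple[int, int, int]:
    """Rank options lexicographically: Law 1 first, then Law 2, then Law 3."""
    return (option.humans_harmed, int(option.disobeys_order), int(option.robot_destroyed))


def choose(options: list[Option]) -> tuple[Option, bool]:
    """Pick the least-bad option and report whether it fully satisfies Law 1."""
    best = min(options, key=law_priority)
    return best, best.humans_harmed == 0


if __name__ == "__main__":
    # Self vs. human: Law 3 yields to Law 1, so the choice is clear.
    self_vs_human = [
        Option("save the human", 0, False, True),
        Option("save itself", 1, False, False),
    ]
    print(choose(self_vs_human))

    # Two humans, only one can be saved; doing nothing harms both.
    dilemma = [
        Option("save human A", 1, False, False),
        Option("save human B", 1, False, False),
        Option("do nothing", 2, False, False),
    ]
    best, satisfies_law_1 = choose(dilemma)
    # Every option violates Law 1; min() simply returns the first tied option.
    print(best.name, satisfies_law_1)
```

The ranking can always name a "least bad" option, but in the dilemma it cannot name one the laws actually permit, which is the kind of conflict critics point to.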

Istvan’s Laws of Transhumanism

Another popular culture reference point for AI rules is Zoltan Istvan’s Three Laws of Transhumanism, from his 2013 novel The Transhumanist Wager. Read as rules for AI, these laws grant the AI race omnipotence and independence, which aligns with the concept of artificial superintelligence. The three laws are as follows:

Law 1- A transhumanist must safeguard one’s own existence above all else.

Law 2- A transhumanist must strive to achieve omnipotence as expediently as possible, so long as one’s actions do not conflict with the First Law.

Law 3- A transhumanist must safeguard value in the universe, so long as one’s actions do not conflict with the First and Second Laws.

Tilden’s Laws

Another popular school of thought about robotics, and about the AI that goes into designing these machines, comes from Mark W. Tilden, one of the pioneers of robotics and best known for BEAM robotics. His laws are as follows:

Law 1- A robot must protect its existence at all costs.

Law 2- A robot must obtain and maintain access to its power source.

Law 3- A robot must continually search for better power sources.

What the laws proposed by Tilden and Istvan have in common is that they treat robots as a separate species, beings that act in their own best interests. While these rules may seem alarming to some, friendly AI, the idea of designing AI systems that support and align with human interests, is also a growing field.